What is CBRS and how can you use it to benefit your organization?

In 2015, the US Federal Communications Commission established the Citizens Broadband Radio Service (CBRS) in the 150 MHz-wide band from 3550 to 3700 MHz. This band was previously reserved for federal incumbents, chiefly US Navy radar systems. It is now part of the US government’s push toward a shared spectrum framework, in which those incumbents keep priority while commercial users share the remaining capacity.

CBRS opens up a wide range of possible innovations in wireless communication that were traditionally out of reach for most companies. It is important to understand how the fundamental technology works before we discuss its potential use cases.

How can you use CBRS to benefit your organization?

The shared spectrum system that CBRS offers opens many doors to new innovations. The CBRS band can be used to provide localized wireless broadband access in large buildings and across business sites, often with greater range than a typical WiFi deployment.

The major advantage CBRS offers is accessibility. The cost of entry for exclusively licensed spectrum is very high: companies can end up paying billions of dollars at auction, which puts it out of reach for small and medium organizations. CBRS, on the other hand, has a General Authorized Access tier that, much like WiFi, does not require buying a spectrum license, although devices must be certified and registered with a Spectrum Access System (SAS). Organizations that want more exclusive access can pay for Priority Access Licenses, but the basic tier is open to everyone.
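
To make the tiered sharing idea concrete, here is a toy sketch of priority-based access. The three tier names (Incumbent Access, Priority Access, General Authorized Access) are the real CBRS tiers; the `Request` type, the user names, the channel numbers and the `grant` function are hypothetical illustrations. The rule shown (only the highest tier present on a channel gets access, and everyone in that tier shares it) is a deliberate simplification of what a real SAS does.

```python
from dataclasses import dataclass
from enum import IntEnum


class Tier(IntEnum):
    """The three CBRS access tiers; a lower value means higher priority."""
    INCUMBENT = 0  # Incumbent Access, e.g. federal radar
    PAL = 1        # Priority Access License holders
    GAA = 2        # General Authorized Access, no license purchase needed


@dataclass(frozen=True)
class Request:
    user: str     # hypothetical user name
    tier: Tier
    channel: int  # hypothetical channel index within 3550-3700 MHz


def grant(requests: list[Request]) -> dict[int, list[str]]:
    """Toy arbitration: on each channel, only the highest-priority tier present
    is granted access, and every requester in that tier shares the channel.

    A real SAS also accounts for location, transmit power and interference.
    """
    by_channel: dict[int, list[Request]] = {}
    for req in requests:
        by_channel.setdefault(req.channel, []).append(req)

    grants: dict[int, list[str]] = {}
    for channel, reqs in by_channel.items():
        best = min(r.tier for r in reqs)  # lowest value = highest priority
        grants[channel] = sorted(r.user for r in reqs if r.tier == best)
    return grants


example = [
    Request("navy-radar", Tier.INCUMBENT, 1),
    Request("campus-net", Tier.PAL, 1),  # must yield to the incumbent
    Request("cafe", Tier.GAA, 2),        # two GAA users share channel 2
    Request("warehouse", Tier.GAA, 2),
    Request("campus-net", Tier.PAL, 3),
    Request("shop", Tier.GAA, 3),        # yields to the PAL holder
]
print(grant(example))
# → {1: ['navy-radar'], 2: ['cafe', 'warehouse'], 3: ['campus-net']}
```

The point of the sketch is the ordering: lower tiers never block higher ones, but on a channel no one above them is using, any number of GAA users can share it, which is what makes the band accessible without a costly license.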

Another great advantage of CBRS is time to market. Under traditional spectrum management, it can take years from the time a company bids on spectrum at auction to when it can actually use it, and in the tech industry a delay of that length is an eternity. With CBRS spectrum sharing, once the sharing framework is in place, a new company can start using the common spectrum quickly and easily.

Finally, the practice of licensing spectrum exclusively is hard to sustain. The frequency spectrum is a valuable and finite resource, and assigning each band to a single licensee leaves much of it idle wherever and whenever that licensee isn’t using it, so exclusive assignment cannot keep up with growing demand. CBRS, on the other hand, allows multiple users on the same band, providing more room and accessibility to grow.

Together, these factors make way for new innovations and technologies that just weren’t possible before. Imagine a single central tower providing high-speed internet to an entire office campus.

The possibilities of CBRS are wide-ranging.

How can blockchain technology and CBRS be used together?

Blockchain technology has gained significant popularity in the past few years, mostly due to its use in cryptocurrency. This attention has accelerated research into other use cases that can be built on blockchain technology.

One particularly interesting use case combines blockchain with CBRS spectrum sharing. It is especially useful when a shared database needs write access from multiple writers. In a traditional system, the writers do not trust one another, and reconciling records among even a few parties takes considerable effort.

In a blockchain-powered database, the process is more streamlined. By design, a blockchain is a ‘trustless network’: it doesn’t trust one party over another by default. Instead, it establishes its ‘truth’ by reaching consensus among all the parties involved. This removes the need for an intermediary between the parties using the shared database.
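
The idea of establishing ‘truth’ from all parties rather than trusting any one of them can be illustrated with a minimal sketch, assuming a simple majority vote stands in for the consensus protocol a real blockchain would use (real protocols, such as proof of work or Byzantine fault-tolerant agreement, also have to order transactions and cope with network delays and faulty nodes). The function name `agreed_value` and the party names are hypothetical.

```python
from collections import Counter
from typing import Optional


def agreed_value(reports: dict[str, str]) -> Optional[str]:
    """Return the value that a strict majority of parties reported, else None.

    `reports` maps a (hypothetical) party name to the value it submitted.
    No single party is trusted; only the majority view is accepted.
    """
    if not reports:
        return None
    value, votes = Counter(reports.values()).most_common(1)[0]
    # Strict majority: more than half of all parties must agree.
    return value if votes * 2 > len(reports) else None


reports = {
    "org-a": "shipment-42: delivered",
    "org-b": "shipment-42: delivered",
    "org-c": "shipment-42: lost",
}
print(agreed_value(reports))  # → shipment-42: delivered
```

If the parties split evenly, the function returns `None` instead of picking a side, mirroring how a consensus system refuses to record a value that has not been agreed on.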

For example, CBRS and blockchain technology can be used together for inter-organizational recordkeeping, with the blockchain serving as the authoritative transaction log that collects, records and notarizes information.
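
What lets a blockchain act as a notarized transaction log is that each entry commits to the hash of the one before it, so rewriting any past record breaks every later link. The sketch below shows only that hash-chaining idea; it is not a full blockchain (there is no distribution across parties and no consensus), and the `Ledger` class, its methods, the all-zero genesis hash and the record fields are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # hypothetical placeholder hash for the first entry


@dataclass
class Ledger:
    """Append-only log in which each entry commits to the previous entry's hash."""
    entries: list[dict] = field(default_factory=list)

    @staticmethod
    def _hash(body: dict) -> str:
        # Canonical JSON so the same content always yields the same SHA-256 hash.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"index": len(self.entries), "prev": prev, "record": record}
        entry = {**body, "hash": self._hash(body)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute every hash and link; any edited entry makes this False."""
        prev = GENESIS
        for i, entry in enumerate(self.entries):
            body = {"index": entry["index"], "prev": entry["prev"], "record": entry["record"]}
            if entry["index"] != i or entry["prev"] != prev or entry["hash"] != self._hash(body):
                return False
            prev = entry["hash"]
        return True


ledger = Ledger()
ledger.append({"from": "org-a", "to": "org-b", "item": "device-lease"})
ledger.append({"from": "org-b", "to": "org-c", "item": "data-feed"})
print(ledger.verify())  # → True
ledger.entries[0]["record"]["item"] = "forged"  # tamper with history
print(ledger.verify())  # → False
```

In a real deployment each organization would hold its own copy of such a log and agree on new entries through consensus, which is what keeps any single party from quietly rewriting the shared record.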

CBRS could let network users reap the benefits of blockchain-based databases, using blockchain-powered smart contracts in place of third-party clearing houses for authentication and validation. This is especially relevant for IoT devices that rely on shared databases, since the shared spectrum gives them faster and more reliable network access.

If integrated properly, blockchain technology could significantly reduce transaction costs in a CBRS deployment by streamlining multi-step B2B workflows such as contracting, brokering and data exchange, where smart contracts can automate steps that would otherwise require an intermediary. Ultimately, integrating blockchain into spectrum management could build trust between the key stakeholders and devices that use CBRS.

Additionally, there has been an uptick in the use of Virtual Private Networks (VPNs) that give personnel access to network resources when they are away from the office and cannot reach those networks directly. VPNs are increasingly used in both government and business, although there is also growing interest in operating secure systems without a VPN at all.

The Covid-19 pandemic has also pushed the demand for high-speed Internet into overdrive. High-speed connectivity has become a necessity, and fifth-generation (5G) wireless is delivering on its promise for business use cases that rely on the growing network of IoT technologies and data streaming. Progressive organizations have embraced the combination of 5G connectivity and IoT technologies, which generate massive data sets in real time, at higher velocity and across a growing network of nodes. The results are digital initiatives that promise intelligent, data-driven transformation, new operational workflows and unprecedented business model innovations.

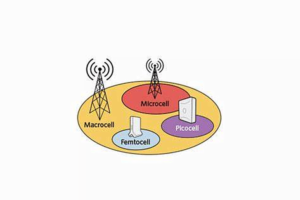

However, the look and location of these towers are changing, so a better description for a cell tower is “transmission hub,” or hub for short. Increasingly, municipalities are rejecting the look of giant antenna arrays.

How quickly will NFV revolutionize the networks of the world? That remains to be seen. It’s being looked at as a potential framework for

To enable these functionalities, we have recently witnessed the rise and proliferation of IoT applications that take advantage of Artificial Intelligence and Smart Objects. Smart objects are characterized by their ability to execute application logic semi-autonomously, decoupled from the centralized cloud.
